Run Snyk scans and ingest results

This topic describes how to run Snyk scans and ingest the results into a Harness pipeline. STO supports scans from the following Snyk products:

- Snyk Open Source
- Snyk Code
- Snyk Container
- Snyk Infrastructure as Code (currently in beta)

Important notes for running Snyk scans in STO

- Snyk Code and Snyk Container scans require a Snyk API key. You should create a Harness secret for your API key.
- For an overview of recommended Snyk workflows, go to CI/CD adoption and deployment in the Snyk documentation.
- If you're scanning a code repository, note the following:
  - In some cases, you need to build a project before scanning. You can do this in a Run step in your Harness pipeline.

    For specific requirements, go to Setup requirements for AWS CodePipeline in the Snyk documentation. These requirements are applicable to Harness pipelines as well as AWS CodePipeline.
  - Harness recommends that you use language-specific Snyk container images to run your scans.
  - For complete end-to-end examples, go to the repository scanning examples later in this topic.
- If you're scanning a container image, note the following:
  - Container image scans require Docker-in-Docker running in Privileged mode as a background service.
  - For a complete end-to-end example, go to Snyk Container ingestion example below.
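Because a missing API key tends to surface only as a confusing scan error, a Run step can check for the key before it calls the Snyk CLI. The following is a minimal sketch, assuming the key reaches the step as the `SNYK_TOKEN` environment variable (as in the ingestion examples in this topic). The function name `require_snyk_token` is hypothetical and is not part of Harness or Snyk.

```shell
# Hypothetical helper: stop early if the Snyk API key was not passed to the step.
# Assumes the key is exposed as the SNYK_TOKEN environment variable.
require_snyk_token() {
  if [ -z "${SNYK_TOKEN:-}" ]; then
    echo "SNYK_TOKEN is not set; add it under Environment Variables" >&2
    return 1
  fi
  return 0
}
```

In a Run step command, you could call `require_snyk_token || exit 1` on the line before the scan command.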

Snyk Open Source orchestration example

This example uses a Snyk step in orchestration mode, which runs snyk test and snyk monitor and then ingests the results.

- Add a codebase connector that points to the repository you want to scan.
- Add a Security Tests or Build stage to your pipeline.
- Add a Snyk security step to run the scan and ingest the results. In this example, the step is configured as follows:
  - Scan Mode = Orchestration
  - Target Type = Repository
  - Target Name = (user-defined)
  - Variant = (user-defined)
  - Access Token = <+secrets.getValue("snyk_api_token")> (Harness secret)
- Apply your changes, then save and run the pipeline.

Snyk Open Source ingestion example

The following example uses snyk test to scan a .NET repository.

The scan stage in this pipeline has the following steps:

- A Run step builds the project (if required), scans the repository, and saves the output to a shared folder.
- A Snyk step then ingests the output file.

- Add a codebase connector to your pipeline that points to the repository you want to scan.
- Add a Security Tests or Build stage to your pipeline.
- Go to the Overview tab of the stage. Under Shared Paths, enter the following path: /shared/scan_results
- Add a Run step that runs the build (if required), scans the repo, and saves the results to the shared folder:
  - In the Run step Command field, add code to build the project (if required) and save the scan results to the shared folder.

    In this example, we want to scan a .NET repository. The setup requirements topic says: Build only required if no packages.config file present. The repo does not contain this file, so the command restores the dependencies before scanning. Enter the following code in the Command field:

        # populates the dotnet dependencies
        dotnet restore SubSolution.sln
        # Snyk Open Source scan
        # https://docs.snyk.io/snyk-cli/commands/test
        snyk --file=SubSolution.sln test \
          --sarif-file-output=/shared/scan_results/snyk_scan_results.sarif || true
        snyk monitor --all-projects || true

  - For the Run step Image, use a supported Snyk image based on the type of code in your codebase.
  - In the Run step Environment Variables field, under Optional Configuration, add a variable to access your Snyk API key: SNYK_TOKEN=<+secrets.getValue("snyk_api_token")>
  - In the Run step > Advanced tab > Failure Strategies, set the Failure Strategy to Mark as Success.

    This step is required to ensure that the pipeline proceeds if Snyk finds a vulnerability. Otherwise, the build will exit with an error code before STO can ingest the data.
- Add a Snyk security step to ingest the results of the scan. In this example, the step is configured as follows:
  - Scan Mode = Ingestion
  - Target Type = Repository
  - Target Name = (user-defined)
  - Variant = (user-defined)
  - Ingestion = /shared/scan_results/snyk_scan_results.sarif
- Apply your changes, then save and run the pipeline.
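The `|| true` suffix in the scan command hides every non-zero exit code, including genuine scan failures. As a possible refinement, the sketch below relies on the exit codes that the Snyk CLI documents for `snyk test` (0 for no vulnerabilities, 1 for vulnerabilities found, 2 for a failure, 3 for no supported projects detected) and tolerates only code 1. The wrapper name `tolerate_findings` is hypothetical; this is an illustration, not the method Harness prescribes.

```shell
# Hypothetical wrapper: run a scan command and treat "vulnerabilities found"
# (exit code 1) as success so that the ingestion step still runs.
# Any other non-zero code (for example 2 or 3) is passed through as a failure.
tolerate_findings() {
  rc=0
  "$@" || rc=$?
  case "$rc" in
    0|1) return 0 ;;
    *)
      echo "scan command failed with exit code $rc" >&2
      return "$rc"
      ;;
  esac
}
```

For example, `tolerate_findings snyk --file=SubSolution.sln test --sarif-file-output=/shared/scan_results/snyk_scan_results.sarif` could stand in for the `|| true` form. If the Run step also uses the Mark as Success failure strategy, the pipeline continues either way, but the step log then shows why the scan stopped.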

Snyk Code ingestion example

The following example uses snyk code test to scan a .NET repository.

The scan stage in this pipeline has the following steps:

- A Run step builds the project (if required), scans the repository, and saves the output to a shared folder.
- A Snyk step then ingests the output file.

- Add a codebase connector to your pipeline that points to the repository you want to scan.
- Add a Security Tests or Build stage to your pipeline.
- Go to the Overview tab of the stage. Under Shared Paths, enter the following path: /shared/scan_results
- Add a Run step that runs the build (if required), scans the repo, and saves the results to the shared folder:
  - In the Run step Command field, add code to build the project (if required) and save the scan results to the shared folder.

    In this example, we want to scan a .NET repository. The setup requirements topic says: Build only required if no packages.config file present. The repo does not contain this file, so the command restores the dependencies before scanning. Enter the following code in the Command field:

        # populates the dotnet dependencies
        dotnet restore SubSolution.sln
        # scan the code repository
        snyk code test \
          --file=SubSolution.sln \
          --sarif-file-output=/shared/scan_results/snyk_scan_results.sarif || true

  - For the Run step Image, use a supported Snyk image based on the type of code in your codebase.
  - In the Run step Environment Variables field, under Optional Configuration, add a variable to access your Snyk API key: SNYK_TOKEN=<+secrets.getValue("snyk_api_token")>
  - In the Run step > Advanced tab > Failure Strategies, set the Failure Strategy to Mark as Success.

    This step is required to ensure that the pipeline proceeds if Snyk finds a vulnerability. Otherwise, the build will exit with an error code before STO can ingest the data.
- Add a Snyk security step to ingest the results of the scan. In this example, the step is configured as follows:
  - Scan Mode = Ingestion
  - Target Type = Repository
  - Target Name = (user-defined)
  - Variant = (user-defined)
  - Ingestion = /shared/scan_results/snyk_scan_results.sarif
- Apply your changes, then save and run the pipeline.
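Because the Run step is configured to succeed even when the scan command fails, a scan that never wrote its output only shows up later, when the ingestion step cannot read the file. A small check at the end of the Run step command can make that cause visible in the step log. The following is a sketch only; the function name `results_file_ready` is hypothetical.

```shell
# Hypothetical check: confirm the scan wrote a non-empty results file
# before the ingestion step tries to read it.
results_file_ready() {
  file="$1"
  if [ ! -s "$file" ]; then
    echo "results file missing or empty: $file" >&2
    return 1
  fi
  return 0
}
```

In this workflow, the call would be `results_file_ready /shared/scan_results/snyk_scan_results.sarif`, placed after the `snyk code test` command.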

Snyk Container ingestion example

This example uses snyk container test to scan a container image. The scan stage consists of three steps:

- A Background step that runs Docker-in-Docker as a background service in Privileged mode (required when scanning a container image).
- A Run step that scans the image and publishes the results to a SARIF file.
- A Snyk step that ingests the scan results.

- Add a Security Tests or Build stage to your pipeline. On the Overview tab of the stage, under Shared Paths, add /shared/scan_results and /var/run. Both paths appear in the YAML pipeline for this workflow later in this topic.
- Add a Background step to the stage and set it up as follows:
  - Dependency Name = dind
  - Container Registry = The Docker connector to download the DinD image. If you don't have one defined, go to Docker connector settings reference.
  - Image = docker:dind
  - Under Optional Configuration, select the Privileged option.
- Add a Run step and set it up as follows:
  - Container Registry = Select a Docker Hub connector.
  - Image = snyk/snyk:docker
  - Shell = Sh
  - Command: Enter code to run the scan and save the results to SARIF:

        snyk container test \
          snykgoof/big-goof-1g:100 -d \
          --sarif-file-output=/shared/scan_results/snyk_container_scan.sarif || true

    Snyk maintains a set of snykgoof repositories that you can use for testing your container-image scanning workflows.
  - Under Optional Configuration, select the Privileged option.
  - Under Environment Variables, add a variable for your Snyk API token. Make sure that you save your token to a Harness secret: SNYK_TOKEN = <+secrets.getValue("snyk_api_token")>
  - In the Run step > Advanced tab > Failure Strategies, set the Failure Strategy to Mark as Success.

    This step is required to ensure that the pipeline proceeds if Snyk finds a vulnerability. Otherwise, the build will exit with an error code before STO can ingest the data.
- Add a Snyk step and configure it as follows:
  - Scan Mode = Ingestion
  - Target Type = Container Image
  - Target Name = (user-defined)
  - Variant = (user-defined)
  - Ingestion = /shared/scan_results/snyk_container_scan.sarif
- Apply your changes, then save and run the pipeline.

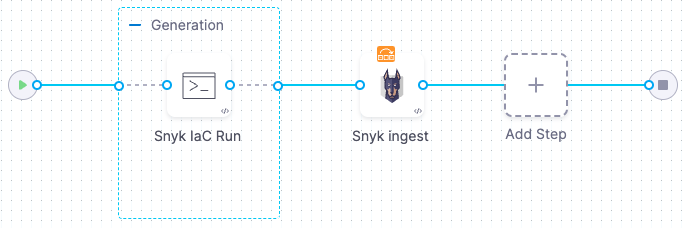
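The Docker-in-Docker background service can take a few seconds to start, so a scan that runs immediately may not find a Docker daemon. One common pattern is to poll until the daemon answers before scanning. The sketch below shows a generic retry helper; the name `wait_for` is hypothetical, and it assumes that a `docker` client is available in the Run step image, which you should confirm for the image you use.

```shell
# Hypothetical helper: retry a command until it succeeds or attempts run out.
# Usage: wait_for <max_attempts> <command> [args...]
wait_for() {
  attempts="$1"
  shift
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "gave up after $attempts attempts: $*" >&2
  return 1
}
```

Under that assumption, the Run step command could start with `wait_for 30 docker info` and then run `snyk container test` as shown in the workflow.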

Snyk Infrastructure as Code ingestion example

Support for Snyk scans of IaC repositories is a beta feature. For more information, contact Harness Support.

The following example uses snyk iac test to scan an IaC code repository.

The scan stage in this pipeline has the following steps:

- A Run step scans the repository and saves the output to a shared folder.
- A Snyk step then ingests the output file.

-

Add a codebase connector to your pipeline that points to the repository you want to scan.

-

Add a Security Tests or Build stage to your pipeline.

-

Go to the Overview tab of the stage. Under Shared Paths, enter the following path:

/shared/scan_results -

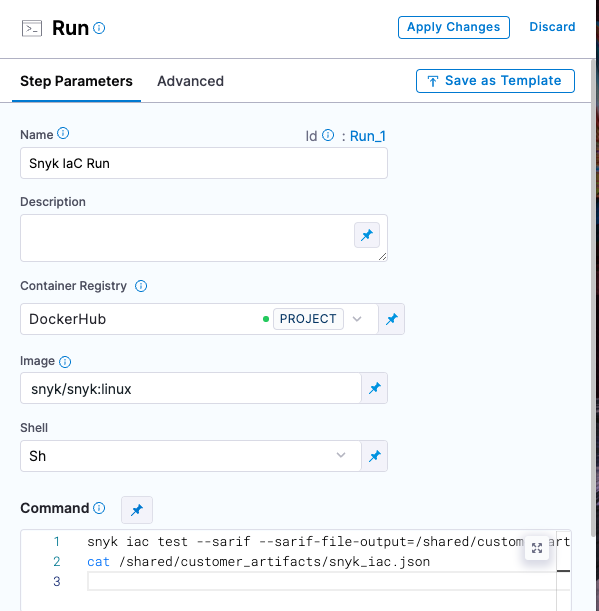

Add a Run step that runs the build (if required), scans the repo, and saves the results to the shared folder:

-

In the Run step Command field, add code to run the scan and save the scan results to the shared folder.

snyk iac test --sarif --sarif-file-output=/shared/scan_results/snyk_iac.json /harness || true

cat /shared/scan_results/snyk_iac.json -

For the Run step Image, use a supported Snyk image based on the type of code in your codebase.

-

In the Run step Environment Variables field, under Optional Configuration, add a variable to access your Snyk API key:

SNYK_TOKEN=<+secrets.getValue("snyk_api_token")>`Your Run step should now look like this:

-

In the Run step > Advanced tab > Failure Strategies, set the Failure Strategy to Mark as Success.

This step is required to ensure that the pipeline proceeds if Snyk finds a vulnerability. Otherwise, the build will exit with an error code before STO can ingest the data.

-

-

Add a Snyk security step to ingest the results of the scan. In this example, the step is configured as follows:

- Scan Mode = Ingestion

- Target Type = Repository

- Target Name = (user-defined)

- Variant = (user-defined)

- Ingestion =

/shared/scan_results/snyk_iac.sarif

-

Apply your changes, then save and run the pipeline.
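The IaC example writes SARIF content to a file with a `.json` extension, so the file name alone does not tell you whether the output is in the expected format. A SARIF log is a JSON object with top-level `version` and `runs` properties, which allows a rough sanity check. The sketch below is a plain text search under that assumption, not a JSON parser or schema validation, and the function name `looks_like_sarif` is hypothetical.

```shell
# Hypothetical heuristic: a SARIF log is a JSON object with top-level
# "version" and "runs" properties, so check that both keys appear.
# This is a plain text search, not a JSON parser or schema validation.
looks_like_sarif() {
  file="$1"
  grep -q '"version"' "$file" && grep -q '"runs"' "$file"
}
```

For this workflow, the call would be `looks_like_sarif /shared/scan_results/snyk_iac.json`, for example in place of the `cat` command when the full output is too long to read in the log.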

Snyk pipeline examples

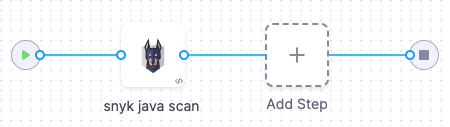

Snyk Open Source scan pipeline (orchestration)

The following illustrates the Snyk Open Source orchestration workflow example above for scanning a Java project.

YAML pipeline, Snyk Open Source scan, Orchestration mode

pipeline:
  projectIdentifier: STO
  orgIdentifier: default
  tags: {}
  properties:
    ci:
      codebase:
        connectorRef: CODEBASE_CONNECTOR_snyklabs
        repoName: java-goof
        build: <+input>
  stages:
    - stage:
        name: scan
        identifier: scan
        description: ""
        type: SecurityTests
        spec:
          cloneCodebase: true
          infrastructure:
            type: KubernetesDirect
            spec:
              connectorRef: K8S_DELEGATE_CONNECTOR
              namespace: harness-delegate-ng
              automountServiceAccountToken: true
              nodeSelector: {}
              os: Linux
          execution:
            steps:
              - step:
                  type: Snyk
                  name: snyk java scan
                  identifier: snyk_java_scan
                  spec:
                    mode: orchestration
                    config: default
                    target:
                      name: snyk-labs-java-goof
                      type: repository
                      variant: main
                    advanced:
                      log:
                        level: debug
                      args:
                        cli: "-d -X"
                    imagePullPolicy: Always
                    auth:
                      access_token: <+secrets.getValue("snyk_api_token")>
  identifier: snyk_orchestration_doc_example
  name: snyk_orchestration_doc_example

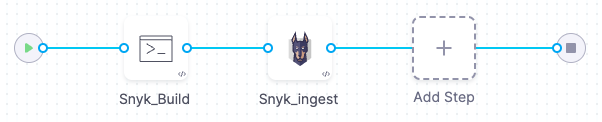

Snyk Open Source scan pipeline (ingestion)

The following illustrates the Snyk Open Source ingestion workflow example above for building and scanning a .NET repository.

YAML pipeline, repository scan, Ingestion mode

pipeline:
  projectIdentifier: STO
  orgIdentifier: default
  tags: {}
  properties:
    ci:
      codebase:
        connectorRef: CODEBASE_CONNECTOR_Subsolution
        repoName: SubSolution
        build: <+input>
  stages:
    - stage:
        name: test
        identifier: test
        type: SecurityTests
        spec:
          cloneCodebase: true
          platform:
            os: Linux
            arch: Amd64
          runtime:
            type: Cloud
            spec: {}
          execution:
            steps:
              - step:
                  type: Run
                  name: Snyk_Build
                  identifier: Snyk_Build
                  spec:
                    connectorRef: CONTAINER_IMAGE_REGISTRY_CONNECTOR
                    image: snyk/snyk:dotnet
                    shell: Sh
                    command: |
                      # populates the dotnet dependencies
                      dotnet restore SubSolution.sln
                      # Snyk Open Source scan
                      # https://docs.snyk.io/snyk-cli/commands/test
                      # write the SARIF output to the file that the Snyk step ingests
                      snyk --file=SubSolution.sln test \
                        --sarif-file-output=/shared/scan_results/snyk_scan_results.sarif || true
                      snyk monitor --all-projects || true
                    envVariables:
                      SNYK_TOKEN: <+secrets.getValue("snyk_api_token")>
              - step:
                  type: Snyk
                  name: Snyk Open Source ingest
                  identifier: Snyk_SAST
                  spec:
                    mode: ingestion
                    config: default
                    target:
                      name: snyk-scan-example-for-docs
                      type: repository
                      variant: master
                    advanced:
                      log:
                        level: info
                    ingestion:
                      file: /shared/scan_results/snyk_scan_results.sarif
          sharedPaths:
            - /shared/scan_results
        variables: []
  identifier: snyk_ingestion_doc_example
  name: snyk_ingestion_doc_example

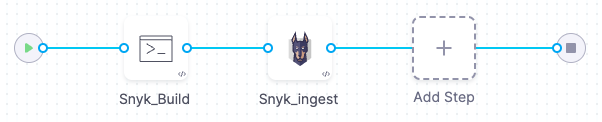

Snyk Code scan pipeline (ingestion)

The following illustrates the Snyk Code ingestion workflow example above for building and scanning a .NET repository.

YAML pipeline, Snyk Code scan, Ingestion mode

pipeline:
  projectIdentifier: STO
  orgIdentifier: default
  tags: {}
  properties:
    ci:
      codebase:
        connectorRef: Subsolution
        repoName: SubSolution
        build: <+input>
  stages:
    - stage:
        name: test
        identifier: test
        type: SecurityTests
        spec:
          cloneCodebase: true
          execution:
            steps:
              - step:
                  type: Run
                  name: Build
                  identifier: Build
                  spec:
                    connectorRef: DockerNoAuth
                    image: snyk/snyk:dotnet
                    shell: Sh
                    command: |-
                      # populates the dotnet dependencies
                      dotnet restore SubSolution.sln
                      # Snyk Code scan
                      snyk code test --sarif-file-output=/shared/scan-results/snyk_scan.sarif || true
                      cat /shared/scan-results/snyk_scan.sarif
                    envVariables:
                      SNYK_TOKEN: <+secrets.getValue("snyk_partner_account")>
              - step:
                  type: Snyk
                  name: snyk_code_ingest
                  identifier: snyk_code_ingest
                  spec:
                    mode: ingestion
                    config: default
                    target:
                      type: repository
                      detection: manual
                      name: dbothwell-snyk-lab-test-code
                      variant: master
                    advanced:
                      log:
                        level: debug
                    imagePullPolicy: Always
                    ingestion:
                      file: /shared/scan-results/snyk_scan.sarif
                  when:
                    stageStatus: Success
          sharedPaths:
            - /shared/scan-results/
          infrastructure:
            type: KubernetesDirect
            spec:
              connectorRef: your_sto-delegate_connector_id
              namespace: your_k8s_namespace
              automountServiceAccountToken: true
              nodeSelector: {}
              os: Linux
          slsa_provenance:
            enabled: false
        description: ""
  identifier: snyk_code_test_example
  name: snyk_code_test_example

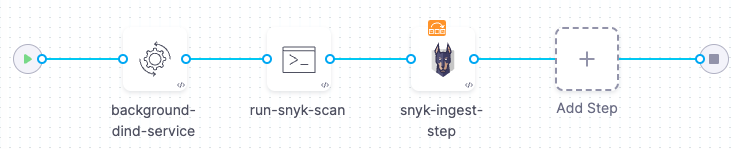

Snyk Container scan pipeline (ingestion)

The following illustrates the Snyk Container ingestion workflow example above for scanning a container image.

YAML pipeline, container image scan, Ingestion mode

pipeline:
  allowStageExecutions: false
  projectIdentifier: STO
  orgIdentifier: default
  tags: {}
  stages:
    - stage:
        name: scan
        identifier: build
        type: CI
        spec:
          cloneCodebase: false
          infrastructure:
            type: KubernetesDirect
            spec:
              connectorRef: K8S_DELEGATE_CONNECTOR
              namespace: harness-delegate-ng
              automountServiceAccountToken: true
              nodeSelector: {}
              os: Linux
          sharedPaths:
            - /shared/scan_results/
            - /var/run
          execution:
            steps:
              - step:
                  type: Background
                  name: background-dind-service
                  identifier: Background_1
                  spec:
                    connectorRef: CONTAINER_IMAGE_REGISTRY_CONNECTOR
                    image: docker:dind
                    shell: Sh
                    entrypoint:
                      - dockerd
                    privileged: true
              - step:
                  type: Run
                  name: run-snyk-scan
                  identifier: Run_1
                  spec:
                    connectorRef: CONTAINER_IMAGE_REGISTRY_CONNECTOR
                    image: snyk/snyk:docker
                    shell: Sh
                    command: |
                      # https://docs.snyk.io/snyk-cli/commands/container-test
                      # https://docs.snyk.io/scan-applications/snyk-container/snyk-cli-for-container-security/advanced-snyk-container-cli-usage
                      snyk container test \
                        snykgoof/big-goof-1g:100 -d \
                        --sarif-file-output=/shared/scan_results/snyk_container_scan.sarif || true
                    privileged: true
                    envVariables:
                      SNYK_TOKEN: <+secrets.getValue("snyk_api_token")>
                  isAnyParentContainerStepGroup: false
              - step:
                  type: Snyk
                  name: snyk-ingest-step
                  identifier: Snyk_1
                  spec:
                    mode: ingestion
                    config: default
                    target:
                      name: snyk-goof-big-goof
                      type: container
                      variant: "100"
                    advanced:
                      log:
                        level: info
                    settings:
                      runner_tag: develop
                    imagePullPolicy: Always
                    ingestion:
                      file: /shared/scan_results/snyk_container_scan.sarif
                  failureStrategies:
                    - onFailure:
                        errors:
                          - AllErrors
                        action:
                          type: Ignore
                  when:
                    stageStatus: Success
          caching:
            enabled: false
            paths: []
        variables:
          - name: runner_tag
            type: String
            value: dev
  identifier: snyk_ingest_image_docexample
  name: "snyk - ingest - image - docexample"

Snyk Infrastructure as Code scan pipeline (ingestion)

The following illustrates the Snyk Infrastructure as Code ingestion workflow example above for scanning an IaC repository.

YAML pipeline, IaC repository scan, Ingestion mode

pipeline:
  allowStageExecutions: false
  projectIdentifier: STO
  orgIdentifier: default
  tags: {}
  properties:
    ci:
      codebase:
        connectorRef: CODEBASE_CONNECTOR_snyk_terraform_goof
        build: <+input>
  stages:
    - stage:
        name: scan
        identifier: build
        type: CI
        spec:
          cloneCodebase: true
          infrastructure:
            type: KubernetesDirect
            spec:
              connectorRef: K8S_DELEGATE_CONNECTOR
              namespace: harness-delegate-ng
              automountServiceAccountToken: true
              nodeSelector: {}
              os: Linux
          sharedPaths:
            - /shared/scan_results/
          execution:
            steps:
              - stepGroup:
                  name: Generation
                  identifier: Generation
                  steps:
                    - step:
                        type: Run
                        name: Snyk IaC Run
                        identifier: Run_1
                        spec:
                          connectorRef: CONTAINER_IMAGE_REGISTRY_CONNECTOR
                          image: snyk/snyk:linux
                          shell: Sh
                          command: |
                            # https://docs.snyk.io/snyk-cli/commands/iac-test
                            snyk iac test --sarif --sarif-file-output=/shared/scan_results/snyk_iac.json /harness || true
                            cat /shared/scan_results/snyk_iac.json
                          envVariables:
                            SNYK_TOKEN: <+secrets.getValue("snyk_api_token")>
                        isAnyParentContainerStepGroup: false
                        failureStrategies:
                          - onFailure:
                              errors:
                                - AllErrors
                              action:
                                type: Abort
              - step:
                  type: Snyk
                  name: Snyk ingest
                  identifier: Snyk_1
                  spec:
                    mode: ingestion
                    config: default
                    target:
                      name: snyk/terraform-goof
                      type: repository
                      variant: master
                    advanced:
                      log:
                        level: info
                    settings:
                      runner_tag: develop
                    imagePullPolicy: Always
                    ingestion:
                      file: /shared/scan_results/snyk_iac.json
                  failureStrategies:
                    - onFailure:
                        errors:
                          - AllErrors
                        action:
                          type: Ignore
                  when:
                    stageStatus: Success
        variables:
          - name: runner_tag
            type: String
            value: dev
        failureStrategies:
          - onFailure:
              errors:
                - AllErrors
              action:
                type: Abort
  identifier: IaCv2_Snyk_docexample_Clone
  name: IaCv2 - Snyk - docexample - Clone